In this series, I explore the history of information security or what later became the history of cybersecurity. These posts are the English translations of the podcast episodes that will be published alongside this, but (unfortunately) in German. In this blog post, I will take a look at the history of IT security in the 1960s, when a lot began to change. We will start with the most important technical changes and inventions of that decade, then move on to Chapter 2 to examine the changes in computing practice that these technologies brought about. In Chapter 3, we will explore new threats and vulnerabilities such as „phone phreaking,“ and in Chapter 4, we will discuss the new security controls that became necessary due to the technical and social changes of that time. If you haven’t read the previous post covering the 1940s and 1950s, please check it out here.

1. Innovations: Mini Computer, Games & digital bookkeeping

In the early 1960s, computers were still quite expensive, and their utility, especially in businesses, was very limited. However, throughout the 1960s, the costs slowly went down. In 1960, the DEC PDP-1 was released, a so-called minicomputer. The PDP-1 is considered to be one of the most important computers during the early days of hacker culture because it was relatively small, relatively inexpensive, and quite capable. It weighed only 730 kg and cost only $120,000 at its introduction, which is equivalent to about $1.2 million today. Compared to today, it was still a huge computer, akin to welding 2–3 refrigerators together. That is American refrigerators, not German ones. The introductory price was still high, and only 53 units were sold, mainly to universities in the USA. The advent of minicomputers provided university students access to computers. Many students and graduates in IT departments and computer science programs could now program applications on them, such as the first music applications or games. In 1961, MIT received a PDP-1. In 1962, students Steve Russell, Martin Graetz, and Wayne Wiitanen programmed one of the first video games in history, Spacewar!, on this machine. The game could be played by two players against each other, thanks to the PDP-1’s cathode-ray tube display. Other fun things were also done with the PDP-1, such as music applications that could play Bach and Beethoven. During the 1960s, computers were becoming smaller, cheaper, and more accessible to a wider audience. By the end of the decade, in 1968, computers had been miniaturized to the extent that they could even be sent to the moon. The guidance computer for the Apollo mission was shrunk from the size of several refrigerators to the size of a briefcase, weighing only about 32 kg.

Computers became more flexible and powerful, too. Vacuum tubes were largely replaced by transistors and, later in the decade, by integrated circuits, dramatically increasing the speed of computers. For instance, in 1961, the IBM 1400 Series was introduced, running at a clock speed of 87 kilohertz. It was one of the first general-purpose computers that were not specialized for a single application but could perform many different tasks. The sixties saw an increase in „removable storage devices,“ and data was increasingly written and stored on magnetic tapes instead of punch cards, making computer programs faster and more powerful. New, simpler programming languages such as COBOL (1960) and BASIC (1964) were introduced, making it easier to program these new general-purpose computers. Previously, computers were primarily programmed in machine or assembler language, which was cumbersome. BASIC was developed at Dartmouth College in the USA to teach students how to program, so it was designed to be relatively simple.

These cheaper, smaller, faster, and more flexible computers were increasingly making their way into the business world. This accelerated the process of digitization, which involves transforming physical functions and processes into digital ones. One of the first things to be digitized in many companies was accounting. In 1964, American Airlines introduced digital flight bookings. This was made possible by the SABRE Travel Reservation System, which enabled digital flight reservations via IBM 7090 mainframes. It’s difficult to imagine now, but back in the day, entire flight logistics were managed on paper, using cardboard cards that contained booking numbers and passenger data for each flight. These cardboard cards had to be created, maintained, and copied to be used on the aircraft. The entire process required 8 human operators per flight, handling these cards. Per flight! This eventually became unfeasible as the number of flights and passengers dramatically increased with the commercialization of aviation after World War II. There was tremendous pressure to digitize this process. In the 1960s, for the first time, assembly-line robot arms were mass-produced, controlled by computers, and took on tasks in industrial production, for example, in General Motors factories.

In the late 1960s, networks emerged as a new function and killer app for computers. As computer centers were being established everywhere at universities and computers were being purchased, it was a logical idea to interconnect them. In 1966, the Carterfone was invented, which is essentially an early version of an acoustic coupler that allows bits, in the form of tones of a specific frequency, to be sent over the telephone network, i.e., landlines. This was a game-changer, as it enabled computer instructions to be sent over the telephone network. This allowed computers to communicate with each other, even if they were not in the same building complex (as was usually the case back in the day). The telephone companies did not find this amusing and sued, but they lost, and thus could no longer dictate what traveled over their lines, whether phone calls or data. The principle of net neutrality was born. The first commercial modems led to the popularization of network connections. A modem is a modulator/demodulator, i.e., it converts bits into acoustic signals and vice versa.
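To make the modulator/demodulator idea a bit more tangible, here is a minimal Python sketch of frequency-shift keying: every bit becomes a short burst of one of two tones, and the receiver decides which tone it hears. The tone pair (1070 Hz and 1270 Hz, loosely borrowed from the Bell 103 modem), the sample rate, the bit length, and all function names are assumptions chosen for illustration, not details of any device mentioned above.

```python
import math

SAMPLE_RATE = 8000                 # samples per second (assumed)
SAMPLES_PER_BIT = 80               # 10 ms per bit (assumed)
TONE_HZ = {0: 1070.0, 1: 1270.0}   # assumed tone for a 0 bit and a 1 bit


def modulate(bits):
    """Turn a list of bits into audio samples, one tone burst per bit."""
    samples = []
    for bit in bits:
        freq = TONE_HZ[bit]
        for n in range(SAMPLES_PER_BIT):
            samples.append(math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
    return samples


def tone_energy(chunk, freq):
    """Measure how strongly `freq` is present in a chunk of samples."""
    in_phase = sum(s * math.cos(2 * math.pi * freq * n / SAMPLE_RATE)
                   for n, s in enumerate(chunk))
    quadrature = sum(s * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
                     for n, s in enumerate(chunk))
    return in_phase ** 2 + quadrature ** 2


def demodulate(samples):
    """Recover the bits by picking the louder of the two tones per chunk."""
    bits = []
    for start in range(0, len(samples), SAMPLES_PER_BIT):
        chunk = samples[start:start + SAMPLES_PER_BIT]
        bits.append(max(TONE_HZ, key=lambda b: tone_energy(chunk, TONE_HZ[b])))
    return bits


def text_to_bits(text):
    """ASCII text to a flat list of bits, most significant bit first."""
    return [(byte >> i) & 1
            for byte in text.encode("ascii")
            for i in range(7, -1, -1)]


def bits_to_text(bits):
    """Inverse of text_to_bits."""
    values = []
    for i in range(0, len(bits), 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        values.append(byte)
    return bytes(values).decode("ascii")


if __name__ == "__main__":
    audio = modulate(text_to_bits("LO"))
    print(bits_to_text(demodulate(audio)))
```

A real telephone line adds noise, echo, and timing drift, which this sketch ignores; it only shows the round trip from bits to tones and back.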

This telephone network was also used in 1969 to establish the first Internet connection. Although that’s not entirely accurate. In 1969, for the first time, four computers on the early ARPANET, the precursor to the internet, communicated together and sent data over the telephone network. This involved a log-in command via the TELNET protocol, which still exists today and creates many IT security headaches due to its clear-text transmissions. However, security was not a design principle of ARPANET. At that time, a different communication protocol, the Network Control Protocol, was used instead of TCP/IP (which was not yet invented). In addition to the ARPANET, other network prototypes were popping up all over the world, such as ALOHAnet in Hawaii, CYCLADES in France, and the National Physical Laboratory (NPL) network in the UK.

2. Context & computer operations: Computer Priesthood & the time-sharing revolution

Miniaturization, increased computing power, flexibility, and computer networks were also changing the way computers were being used. Many organizations, universities, companies, etc., were beginning to establish computer centers. These centers mostly housed the refrigerator-sized computers, sometimes several of them. The support devices such as magnetic storage media, printers, and modems were also not much smaller than cabinets and were also found in these centers. This is familiar from old movies, where entire walls are filled with rotating magnetic tapes, and blinking lights in beige-colored wall cabinets.

This led to the development of administrative structures to manage and maintain these devices. There was usually an IT team that had sole control over these devices and also determined who had access to them. Individual or personal computing, as we know it today, did not exist yet. There were rules for how and for what purposes computers could be used, namely for „serious matters.“ Fun and recreation were not welcome, as expensive devices were primarily purchased for scientific applications or accounting. This gave rise to what Steven Levy in his book Hackers refers to as a kind of „computer priesthood.“

“All these people in charge of punching cards, feeding them into readers, and pressing buttons and switches on the machine were what was commonly called a Priesthood, and those privileged enough to submit data to those most holy priests were the official acolytes. It was an almost ritualistic exchange.” […]

“It went hand in hand with the stifling proliferation of goddamn RULES that permeated the atmosphere of the computation center. Most of the rules were designed to keep crazy young computer fans like Samson and Kotok and Saunders physically distant from the machine itself. The most rigid rule of all was that no one should be able to actually touch or tamper with the machine itself. This, of course, was what those Signals and Power people were dying to do more than anything else in the world, and the restrictions drove them mad.”

Steven Levy, Hackers. Heroes of the Computer Revolution, https://www.gutenberg.org/cache/epub/729/pg729-images.html

This nicely describes the organizational processes and policies that determined computer usage in computer centers at that time. However, this began to change in the 1960s. In 1961 the Compatible Time Sharing System was presented at the MIT Computation Center. Time-sharing means sharing computer time: since the 1940s and 1950s, computing time was limited per user per day. Since computers could only monotask, this made a lot of sense. Computer time had to be reserved and registered in advance at the computer center. The new time-sharing system developed at MIT was essentially an operating system with corresponding hardware that allowed multiple users to access a computer simultaneously and perform calculations in parallel. The system, invented by Fernando Corbató and his team, theoretically supported up to 100 users and ran on an IBM 7090. Each user was given a terminal, i.e., an input device, with a „Flexowriter,“ i.e., a gigantic keyboard, a „tape unit“ for data storage, and later, a screen.

The terminal itself did not perform any calculations but was only used to send instructions to the central computer. The central computer then performed the calculations, and the results were sent back to the terminal and stored on the tape drive or displayed on the screen. This gradually replaced the use of computers with punch cards. By the end of the 1960s, terminals were largely established as the standard input-output method. Today, one would probably call this a „thin client.“ These terminals allowed multiple people to use a computer simultaneously, in contrast to „batch processing,“ where programs were processed serially, i.e., one after the other.

In 1965, multiprogramming and buffering techniques were introduced, allowing multiple users to program a computer and better distribute computing cycles among the users. This led to a more efficient use of computer time: the computer could be constantly fed with processes and did not have to idle. This meant a significant increase in efficiency, as far fewer computing cycles went unused. Therefore, „multiprogramming“ and „time-sharing“ represent an efficient use of still expensive computers. This also reduced the relevance of the gatekeeping elite of the „computer priesthood,“ as the IT team could no longer control every piece of program code that was entered into a machine.
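The difference between batch processing and time-sharing can be sketched in a few lines of Python. This is a deliberately simplified round-robin model, not the scheduler that the Compatible Time Sharing System actually used; the job names, the time units, and the function names are made up for illustration.

```python
from collections import deque


def run_batch(jobs):
    """Batch processing: each job runs to completion before the next starts."""
    timeline = []
    for name, units in jobs:
        timeline.extend([name] * units)
    return timeline


def run_time_shared(jobs, quantum=1):
    """Round-robin: each job gets `quantum` time units, then waits its turn."""
    queue = deque(jobs)
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        used = min(quantum, remaining)
        timeline.extend([name] * used)
        if remaining > used:
            # Not finished yet: go to the back of the queue.
            queue.append((name, remaining - used))
    return timeline


def first_response(timeline, name):
    """Number of time units a user waits before their job runs at all."""
    return timeline.index(name)


if __name__ == "__main__":
    jobs = [("alice", 3), ("bob", 1), ("carol", 2)]  # hypothetical users
    print(run_batch(jobs))
    print(run_time_shared(jobs))
```

With these three hypothetical jobs, carol waits four time units for her first slice in the batch model but only two in the time-shared model. The total amount of work is identical; what changes is that every user at a terminal gets the impression of having the machine to themselves.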

3. Threats: the computer security problem and hacker culture emerge

Time-sharing solved the problem of inefficient computer usage and allowed many more people to access computers, bypassing the central priesthood. However, it created new problems in the history of cybersecurity. One of them was that the complexity of software increased. Through „buffering,“ „time-sharing,“ „multiprogramming“ and easier programming languages, programs got longer. Due to these technologies, programs began to interact with each other. But if one program depends on the results of another, the complexity naturally increases. Such interaction was not possible before: with the predecessor, „batch processing,“ there was only „one program at a time.“ This is difficult to imagine today: for comparison, my Windows 11 Task Manager currently shows 123 processes running simultaneously. The simultaneous execution of multiple processes initiated by multiple users on the same computer created „the computer security problem,“ as it is called in the literature. In other words, THE computer security problem par excellence, the root problem that we still have today: “With multiprogramming, the confidentiality, integrity, and availability of one program could be under attack by an arbitrary concurrently running program” (Meijer/Hoepman/Jacobs/Poll 2007).

How do I prevent one program from conflicting with another, or one program being intentionally manipulated by another one? The possibility that a program on a computer could read, steal, or manipulate data from another program was a theoretical problem until 1961. Then it became practical. In August 1961, a program called Darwin, subtitled „a game of survival and hopefully evolution,“ was developed at Bell Labs. The program was written by Victor Vyssotsky and Robert Morris Sr. – remember the latter name, as it will become important later.

“The game consisted of a program called the umpire and a designated section of the computer’s memory known as the arena, into which two or more small programs, written by the players, were loaded. The programs were written in 7090 machine code, and could call a number of functions provided by the umpire in order to probe other locations within the arena, kill opposing programs, and claim vacant memory for copies of themselves. The game ended after a set amount of time, or when copies of only one program remained alive. The player who wrote the last surviving program was declared winner.” [Wikipedia]

The concept of malware was invented with the game Darwin, somewhat as a proof of concept. Malware refers to programs that influence or sabotage the operation of other programs, thus producing harmful effects. It is unclear how many people were aware of this game, as it was developed at Bell Labs and only ran on the IBM 7090. Therefore, it is rather unlikely that this knowledge became widespread then. However, the underlying problem became the topic of IT security in the 1960s and remains so today. In 1967, a conference dedicated solely to this problem was held in Fort Meade, Maryland, the location of the NSA, which was likely involved in the conference.

Willis Ware was one of the individuals who dealt with this issue. He studied the problem and also wrote a report for the RAND Corporation, which is still highly readable today. In it, he stated that time-sharing brought about a „sea change“ for computer security. He argued that the programs of that time were not developed to deal with the security issues introduced by time-sharing. Programs hijacking one another simply wasn’t a possibility before. The issue became apparent in security-sensitive organizations such as the Department of Defense. For example, if I’m processing classified data with a program, such as ballistic calculations for nuclear weapon delivery systems, another program can read the data from memory. Time-sharing systems of that time were unable to securely separate the data of different users and processes. Additionally, different users with different security clearances often worked in parallel on the same computer, meaning there was a risk that someone with a lower security clearance could accidentally or intentionally read data from a program that was processing higher classified data. Also, the read and write permissions of different users were not separated from each other. It was quite common for data in one process to accidentally overwrite the data of another process in RAM. Another problem was that an error in one program, such as a crash, would also crash other programs and processes running at the same time. There was no isolation of the programs. To solve these problems, Willis Ware proposed building a monitoring instance into the operating system, which would isolate the different processes from each other. This monitor would also ensure that only those with authorization receive read and write permissions for their programs. The monitor must track which users a system has and which program access these users are allowed. This is what we call „rights management“ today.
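The idea of such a monitor can be sketched in Python. This is a toy model under stated assumptions, not a reconstruction of Ware's proposal: the three clearance levels, the rule „read only at or below your clearance, write only what you own,“ and every class and method name are invented for illustration.

```python
# Assumed ordering of classification levels, lowest to highest.
LEVELS = {"unclassified": 0, "secret": 1, "top secret": 2}


class Monitor:
    """Toy access monitor that sits between users and shared memory."""

    def __init__(self):
        self.clearances = {}   # user -> clearance level
        self.owners = {}       # memory segment -> owning user
        self.labels = {}       # memory segment -> classification level
        self.memory = {}       # memory segment -> stored data

    def add_user(self, user, clearance):
        self.clearances[user] = clearance

    def create(self, user, segment, label, data):
        """Create a labeled segment owned by `user`."""
        if LEVELS[self.clearances[user]] < LEVELS[label]:
            raise PermissionError(f"{user} is not cleared for {label}")
        self.owners[segment] = user
        self.labels[segment] = label
        self.memory[segment] = data

    def read(self, user, segment):
        """Allow a read only if the user's clearance covers the label."""
        if LEVELS[self.clearances[user]] < LEVELS[self.labels[segment]]:
            raise PermissionError(f"{user} may not read {segment}")
        return self.memory[segment]

    def write(self, user, segment, data):
        """Allow a write only by the owner, so processes cannot clobber each other."""
        if self.owners[segment] != user:
            raise PermissionError(f"{user} may not write {segment}")
        self.memory[segment] = data


if __name__ == "__main__":
    monitor = Monitor()
    monitor.add_user("analyst", "top secret")     # hypothetical users
    monitor.add_user("student", "unclassified")
    monitor.create("analyst", "ballistics", "top secret", "trajectory table")
    print(monitor.read("analyst", "ballistics"))
    try:
        monitor.read("student", "ballistics")
    except PermissionError as error:
        print("denied:", error)
```

The important design point is that every access goes through one checkpoint that knows the users, their clearances, and the ownership of each piece of memory. The time-sharing systems described above had no such checkpoint, so any program could read or overwrite any other.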

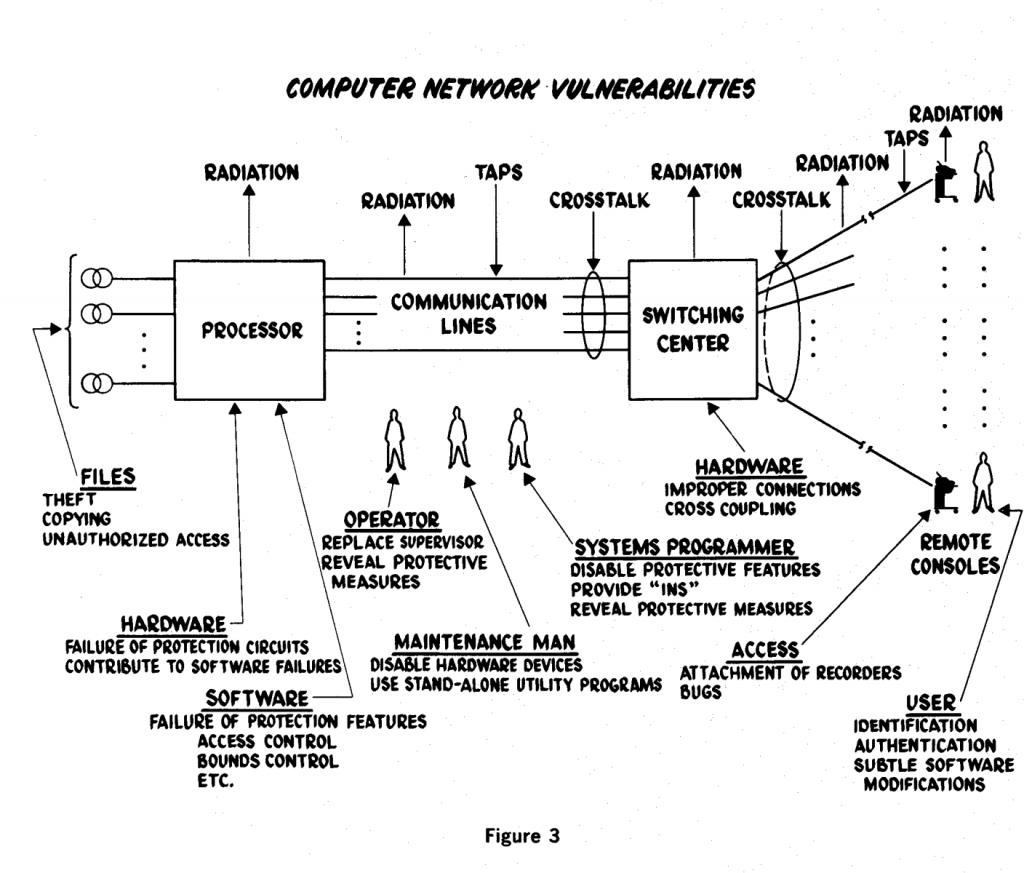

By the end of the 1960s, several working groups were dealing with this theoretical problem of computer insecurity, the lack of isolation between programs and processes. These groups then presented their reports in the 1970s. Governments also became more involved in the history of cybersecurity at this point. More on this in the next blog post. Let’s recap the security situation at the end of the 1960s: We have the problem of vulnerable software that was written without any concept of security in mind, we have the problem of non-separated processes and permissions on multi-user systems, and we have increasingly complex software. A storm was slowly brewing. To become a threat, an IT risk requires not only a vulnerability, but also a threat actor who can exploit the vulnerability, and luckily for us, these also emerged in the 1960s.

In the 1960s, the practice of hacking began and the hacker culture emerged. This was due to a combination of several factors: computers were becoming smaller and more affordable, allowing more people to access them. This sparked the creativity of these individuals to develop applications that had not been previously considered or were not allowed, such as games. Hacking originally meant the creative use of a device for something it was not intended for. It also meant experimenting to understand, test, and modify how a device works. The hacker culture emerged from a fusion of intellectual curiosity, the counter-culture/hippie movement, and the desire to have access to technology. It was essentially the logical counterpart to the computer priesthood, with its “ideology” of centralized and limited access to computer resources. The hacker culture was a form of protest against the trend of centralizing everything, making it proprietary, and hiding the functioning of things from people, as Steven Levy writes. This is the original meaning of hacking as it emerged in the 1960s at universities.

This is most reflected today in the concept of „life hacks,“ which are clever tricks or shortcuts that make life a little easier, and in the idea of the harmless „prank.“ The dark, more negative meaning of hacking, as we know it today, represented by black hoodies and cybercrime, only emerged in the 1980s and 1990s.

The previously mentioned PDP-1 was one of the first computers that early hackers used. The student newspaper „The Tech“ at MIT describes how a PDP-1 was reprogrammed by members of the so-called „Tech Model Railroad Club“ to make free phone calls. This brings us to phone phreaking, one of the first famous hacking practices. I already mentioned modems and acoustic coupler technology that converted bits into acoustic whistle signals. Some of us older people still remember this from 56k modems and the shrill noises they made. On the telephone network, signals of a certain pitch represented control commands such as „connect“ or „end connection.“

Inserting forged signals with the right frequency allowed cost-free phone calls. For the younger ones among us: phone calls used to cost money. The further away the recipient and the longer the call, the more expensive it was. Some people could whistle at a perfect 2,600 Hz pitch, for example, Joe Engressia (also known as Joybubbles), who became known as the „whistling phreaker.“ John Draper, a friend of Engressia, discovered that a children’s whistle in a box of Cap’n Crunch cereal emitted a perfect 2,600 Hz pitch, which earned him the nickname „Captain Crunch.“ Later, this phreaking was automated with so-called Blue Boxes.
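The trick worked because the control tone traveled on the same channel as the caller’s voice, so the network could not tell a whistle from a genuine signal. A tone of 2,600 hertz means 2,600 cycles per second, which is well within the range a person or a toy whistle can produce. The following Python sketch generates such a tone as a list of samples and estimates its pitch by counting upward zero crossings; the sample rate, the tolerance, and the function names are assumptions for illustration, and the detector is far cruder than real switching equipment.

```python
import math

SAMPLE_RATE = 44100   # samples per second (assumed)


def tone(freq_hz, seconds):
    """Generate a pure sine tone as a list of samples."""
    count = int(SAMPLE_RATE * seconds)
    return [math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)
            for n in range(count)]


def estimate_frequency(samples):
    """Estimate the pitch by counting upward zero crossings per second."""
    crossings = sum(1 for previous, current in zip(samples, samples[1:])
                    if previous < 0 <= current)
    duration = len(samples) / SAMPLE_RATE
    return crossings / duration


def looks_like_control_tone(samples, target_hz=2600.0, tolerance_hz=20.0):
    """Crude check: is the estimated pitch close to the control tone?"""
    return abs(estimate_frequency(samples) - target_hz) <= tolerance_hz


if __name__ == "__main__":
    whistle = tone(2600.0, 1.0)
    print(round(estimate_frequency(whistle)))
    print(looks_like_control_tone(whistle))
    print(looks_like_control_tone(tone(440.0, 1.0)))
```

Since the check only looks at pitch, anything that produces the right frequency passes, which is exactly the weakness the phreakers exploited.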

Blue boxes were self-made transmitters that gave the user access to the same 12 tones used by telephone operators, as described in the Bell System Technical Journal (1954 and 1960). Steve Jobs’ first business model was not the Apple I, but selling blue boxes. Early phreakers were known for examining trash cans in front of telephone company offices and other places to find discarded manuals or devices. This is how the still relevant hacking practice of dumpster diving was invented. As a result of the phreaking, MIT felt compelled to stop its telephone services because hackers were making long-distance calls and passing on the costs, for example, to nearby US military facilities.

4. Security Controls: Backups, Continuity Management and Passwords

As we have seen, new vulnerabilities and threats emerged in the 1960s. What was done to address these? What IT security controls were introduced? Well, not much, because it wasn’t seen as a big issue. Early hacking was not considered a crime, but more like a prank, and was mostly seen as a series of isolated incidents. Computer security became more challenging in the 1960s due to the proliferation of minicomputers. They appeared in many open places, and the physical security measures used in the 1940s and 1950s became more difficult to implement. Computers were no longer only found in isolated military bases surrounded by barbed wire, guarded by sentries, and locked behind security doors. They were now in university computer centers and businesses. People without security clearances and background screenings, such as students, gained access to these devices. Through time-sharing, more people could interact with the same system. This made physical security increasingly difficult. Efforts were made to continue implementing physical security measures: access to IT centers was controlled and regulated. Only enrolled students on access lists were allowed into the rooms, and only the IT priesthood could open the machines and tinker with them. The first IT security policies also emerged.

In the 1960s, the first backup policies and continuity of operation plans were introduced. The main problem for computer centers at that time was continuous availability, meaning that systems should not crash, and if they did, which was often the case, they should be quickly restarted to avoid wasting computing time. Crashes were often caused by power outages, burnt-out tubes, and poorly written programs. In response, the concept of redundant power supplies and hardware emerged (i.e., keeping spare tubes and systems, if affordable). Crashes at that time usually led to data loss. Therefore, data availability suddenly became important. Backup and recovery procedures for computers became an important topic for computer center management. An important measure to ensure continuity was to create copies of the master files from hard drives on magnetic tapes. Sometimes, the input and output data of the programs were also copied onto tapes. Backups were usually made in daily, weekly, or even longer cycles. The backup tapes were stored in safes at a distance from the computer center. This is where the concept of off-site backups emerged, which is important if, for example, the data center burned down or was flooded. The physical security of the tape safe and the procedures to access this safe naturally led to the creation of access control policies. In sum, we can see the early origins of the various IT security controls still used today: technical measures, organizational measures (access control), and processes and policies (backup & continuity planning) working in tandem.

Finally, a technical security control that was invented in 1961 should be mentioned. The team of Fernando Corbató, who invented time-sharing, developed the first digital password to control access to a time-sharing computer per user. Love it or hate it, passwords have been a part of the history of cybersecurity for over 60 years now and have caused many headaches since.
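The basic idea of a per-user password check can be sketched as follows. Note that this is a modern-style illustration, not a description of how the 1961 system worked: the salted PBKDF2 hashing, the iteration count, and the class and method names are all assumptions taken from today’s practice and Python’s standard library.

```python
import hashlib
import hmac
import os


class PasswordStore:
    """Toy per-user login check that stores salted hashes, never passwords."""

    ITERATIONS = 100_000   # assumed work factor for this sketch

    def __init__(self):
        self._records = {}   # user -> (salt, derived key)

    @classmethod
    def _derive(cls, password, salt):
        # Derive a key from the password so the plain text is never stored.
        return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                   salt, cls.ITERATIONS)

    def register(self, user, password):
        salt = os.urandom(16)   # random salt, unique per user
        self._records[user] = (salt, self._derive(password, salt))

    def login(self, user, password):
        record = self._records.get(user)
        if record is None:
            return False
        salt, expected = record
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(expected, self._derive(password, salt))


if __name__ == "__main__":
    store = PasswordStore()
    store.register("corby", "time-sharing-1961")   # hypothetical account
    print(store.login("corby", "time-sharing-1961"))
    print(store.login("corby", "guess"))
```

Each user gets their own secret, and the system only admits those who can prove they know it. That is the same principle that was introduced for time-sharing computers in the 1960s.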

Sources

- Computer History Timeline, https://www.computerhistory.org/timeline/1947/

- Meijer/Hoepman/Jacobs/Poll (2007), Computer Security Through Correctness and Transparency, in: de Leeuw/Bergstra, The History of Information Security: A Comprehensive Handbook, Elsevier.

- Yost (2007), A History of Computer Security Standards, in: de Leeuw/Bergstra, The History of Information Security: A Comprehensive Handbook, Elsevier.

- DeNardis (2007), A History of Internet Security, in: de Leeuw/Bergstra, The History of Information Security: A Comprehensive Handbook, Elsevier.

- Brenner (2007), History of Computer Crime, in: de Leeuw/Bergstra, The History of Information Security: A Comprehensive Handbook, Elsevier.

- Biene-Hershey (2007), IT Security and IT Auditing Between 1960 and 2000, in: de Leeuw/Bergstra, The History of Information Security: A Comprehensive Handbook, Elsevier.

- PDP-1 – Wikipedia

- Apollo Guidance Computer – Wikipedia

- Sabre (travel reservation system) – Wikipedia

- https://www.automate.org/robotics/engelberger/joseph-engelberger-unimate

- Levy, Hackers. Heroes of the Computer Revolution, https://www.gutenberg.org/cache/epub/729/pg729-images.html

- https://en.wikipedia.org/wiki/Darwin_(programming_game)